Dataflow Configuration

1. Introduction

In Sharemind HI, data is compartmentalized into topics and algorithms are moved into tasks. Task inputs are either stakeholder uploads or outputs from another task. Task outputs may be accessible to certain stakeholders and may also be available to another task as input. In the current version of Sharemind HI, the whole dataflow must reside in one processor.

The Dataflow Configuration (DFC) is the basis for access controls in a Sharemind HI deployment. It describes which stakeholders and which analysis code are part of a specific deployment and how data moves between the parties.

The dataflow configuration contains the following:

- A collection of tasks which as a whole form the solution.

- The fingerprints of the tasks that perform the analysis.

- User identities (certificates) of the related stakeholders as well as their capabilities.

- A dataflow graph serving as an access control list for data provided by clients and data created by tasks.

- Task running permissions.

In this way, the dataflow configuration also defines three roles:

- Input Provider – can upload inputs for at least one topic.

- Output Consumer – can download outputs from at least one topic.

- Runner – can start execution of at least one task.

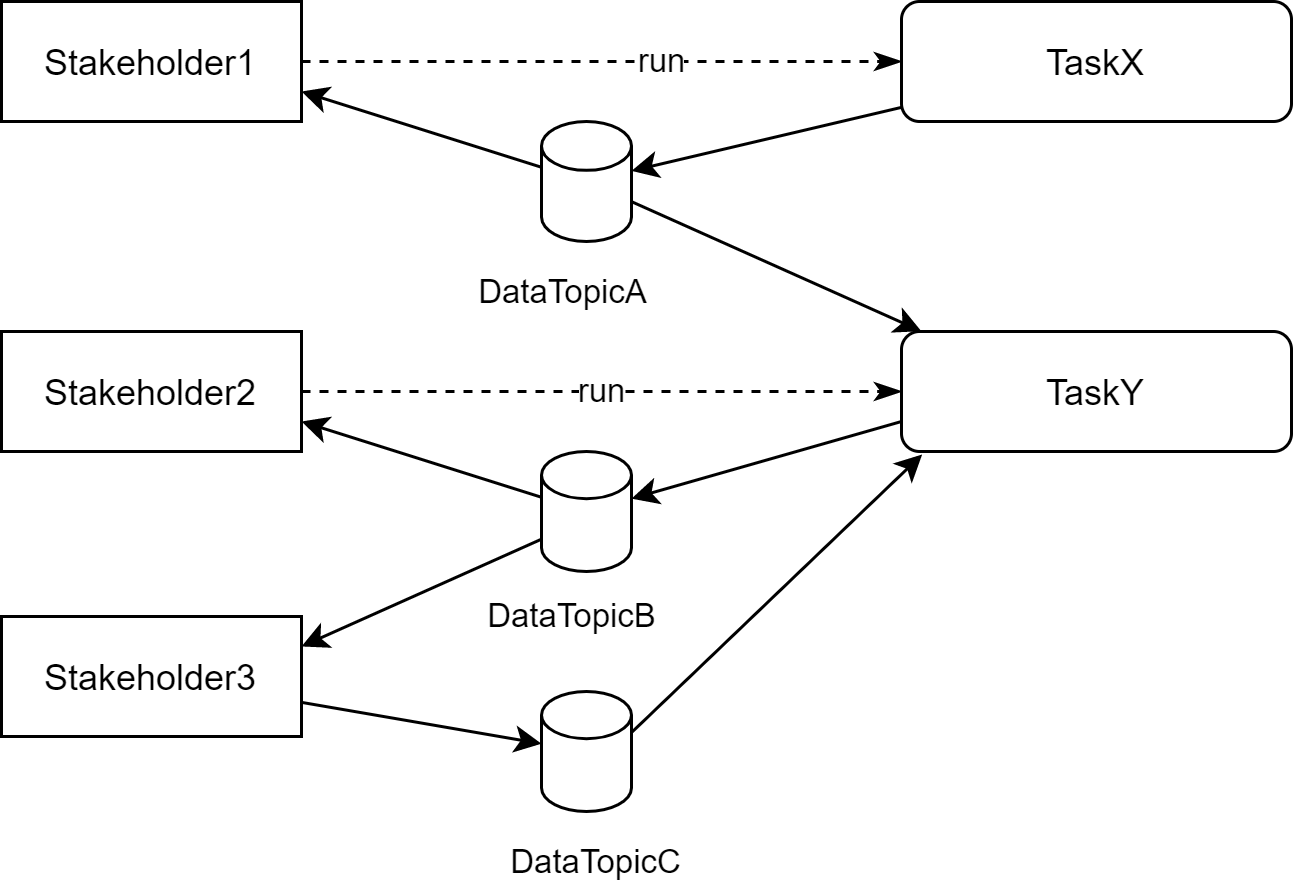
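The three roles follow from the producer, consumer, and runner lists of the configuration. The following is a minimal Python sketch of that derivation, assuming the DFC has already been parsed into a dictionary that mirrors the YAML layout. The function `derive_roles` and the sample data are hypothetical and not part of Sharemind HI; end-entity roles are ignored for brevity.

```python
# Hypothetical in-memory form of a DFC, e.g. the result of parsing the YAML file.
dfc = {
    "Stakeholders": [{"Name": "client1"}, {"Name": "client2"}],
    "Tasks": [{"Name": "sample_task", "Runners": ["client1"]}],
    "Topics": [
        {"Name": "input1", "Producers": ["client2"], "Consumers": ["sample_task"]},
        {"Name": "output1", "Producers": ["sample_task"], "Consumers": ["client1"]},
    ],
}


def derive_roles(dfc):
    """Map each stakeholder name to the set of roles the DFC grants it."""
    roles = {s["Name"]: set() for s in dfc.get("Stakeholders", [])}
    for topic in dfc.get("Topics", []):
        for name in topic.get("Producers", []):
            if name in roles:  # task names also appear here; skip them
                roles[name].add("Input Provider")
        for name in topic.get("Consumers", []):
            if name in roles:
                roles[name].add("Output Consumer")
    for task in dfc.get("Tasks", []):
        for name in task.get("Runners", []):
            if name in roles:
                roles[name].add("Runner")
    return roles


roles = derive_roles(dfc)
for name, granted in roles.items():
    print(name, sorted(granted))
```

In this sample, `client2` only uploads data, while `client1` both runs the task and downloads its output.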

The dataflow can be pictured as a graph, as in the following example:

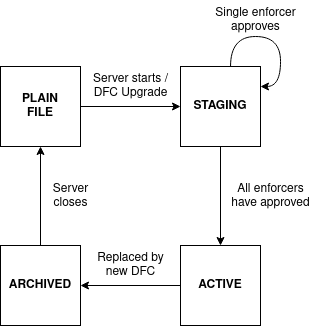
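The edges of such a graph can be read off the topic definitions: each producer points to its topic, and each topic points to its consumers. The sketch below illustrates this under the assumption that the topics are available as a list of dictionaries mirroring the YAML layout; `dataflow_edges` is a hypothetical helper, not a Sharemind HI API.

```python
# Topics as they might look after parsing a DFC file (sample data).
topics = [
    {"Name": "input1", "Producers": ["end-entity-role-1"], "Consumers": ["sample_task"]},
    {"Name": "output1", "Producers": ["sample_task"], "Consumers": ["client1"]},
]


def dataflow_edges(topics):
    """Return directed (source, target) edges: producer -> topic -> consumer."""
    edges = []
    for topic in topics:
        for producer in topic.get("Producers", []):
            edges.append((producer, topic["Name"]))
        for consumer in topic.get("Consumers", []):
            edges.append((topic["Name"], consumer))
    return edges


edges = dataflow_edges(topics)
for source, target in edges:
    print(f"{source} -> {target}")
```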

2. Lifecycle of the dataflow configuration

The DFC file is read once, when the core enclave starts with a fresh state, that is, when no previous state file is found. During the deployment of a Sharemind HI instance, all enforcers must approve the staging DFC before any analysis can be run. The DFC can be updated through the DFC Upgrade procedure.
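The approval rule amounts to a set-inclusion check: analysis is possible only once the set of approvals covers every enforcer. The following Python sketch illustrates the idea only; `analysis_may_run` is a hypothetical name, and the actual check happens inside the Sharemind HI server, not in client code.

```python
def analysis_may_run(enforcers, approvals):
    """Return True only if every enforcer has approved the staging DFC."""
    return set(enforcers).issubset(approvals)


enforcers = ["client1", "client2"]
print(analysis_may_run(enforcers, {"client1"}))
print(analysis_may_run(enforcers, {"client1", "client2"}))
```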

3. Dataflow configuration file format

The dataflow configuration is formatted as a YAML file. The file should contain the following entries:

- Stakeholders:

    Stakeholder configuration. A list of all static stakeholders, each with the following properties.

    - Name: "client1"

        A short name for this stakeholder, at most 64 bytes long. Used within the dataflow configuration to reference this stakeholder when assigning roles. Independent of the Common Name in the certificate.

    - CertificateFile: "client1.crt"

        Client certificate used for identification on the Sharemind HI Server. Unless the path is absolute, it is resolved relative to the dataflow configuration file. The roles granted to this client in this dataflow configuration also need to be assigned in the client certificate. If the certificate has the CA extension set, the stakeholder can assign end-entity roles (for the creation of dynamic end-users). However, the CA stakeholder itself is only allowed to be enforcer or auditor. The Certificate Setup page provides instructions for creating certificates.

    - RecoveryPublicKeyFile: "client1-recovery.pub"

        If present, this stakeholder must participate in future operations that recover from backups.

- Auditors:

    A list of stakeholder names. Declares who will be able to download the audit log key and thus audit the system.

- Enforcers:

    A list of stakeholder names. Declares who is responsible for validating the dataflow configuration and sending their approval before the analysis can be run.

- End-Entity-Roles:

    Configuration of roles for dynamic end users.

    - Name: "ee-consumers"

        The name of an end-entity role. This name needs to be encoded in the X509 certificates, each of which can name only a single role. Creating end-entity certificates is described in the Certificate Setup page. This name can be used in the task runner, topic producer and topic consumer lists. X509 V3 OID: 1.3.6.1.4.1.3516.17.1.1.2

    - Assigned-By:

        The name of a stakeholder whose certificate has the CA extension.

- Tasks:

    Task configuration. Contains a list of tasks, each with the following properties. Each task represents one task enclave that may be run, read data from topics, or write data to topics.

    - Name: "sample_task"

        Name of the task. The name must match an enclave name in the list configured in the server configuration.

    - EnclaveFingerprint: "0123456789ABCDEF0123456789ABCDEF0123456789ABCDEF0123456789ABCDEF"

        Task enclave fingerprint (a hash of the enclave code and related parameters). The fingerprint should be provided by the auditor after building the task enclaves.

    - SignerFingerprint: "0123456789ABCDEF0123456789ABCDEF0123456789ABCDEF0123456789ABCDEF"

        Task enclave signer fingerprint (a hash of the public key of the enclave signing key pair). Multiple enclaves signed by the same Independent Software Vendor (ISV) have the same signer fingerprint.

    - DataRetentionTime: 0s

        Time after which the metadata of the task invocation is deleted. This metadata includes the task runner, the arguments, the outputs that were produced, and error messages on failure.

        A value of 0s disables the data retention feature.

        The time format is the same as in the server configuration. Milliseconds are ignored.

        Note: The encrypted output data itself is not deleted. Its deletion is governed by the data retention time of the respective topic.

        Note: Time is untrusted in the enclave. A malicious server administrator can arbitrarily modify the time.

    - Runners:

        A list of stakeholder names that are allowed to run this task.

- Topics:

    Topic configuration. A list of topics, each with the following properties.

    - Name: "input1"

        Name of the topic. This name is used in the data upload, download and delete actions, as well as in the task enclave to read and write persistent data.

    - DataRetentionTime: 0s

        Time after which the metadata and encrypted data of uploads to the topic are automatically deleted from the server.

        A value of 0s disables the data retention feature.

        The time format is the same as in the server configuration. Milliseconds are ignored.

        Note: Time is untrusted in the enclave. A malicious server administrator can arbitrarily modify the time.

    - Producers:

        A list of stakeholder names and task names that are allowed to upload / write data to this topic.

    - Consumers:

        A list of stakeholder names and task names that are allowed to download / read data from this topic.

    - Options (optional):

        A list of options for handling the topic state.

        - TriggerStateSaveOnUpload (optional): Triggers a manual save of the state on upload; the uploaded data becomes visible only after the state has been saved.

            Use this option if losing the data in a server failure would cause consistency problems. With this option, the data is still available when the server is restarted after a failure (but only if the data retention policy has not deleted it).
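Several of the constraints above can be checked mechanically before a configuration is submitted for approval. The following Python sketch is one possible pre-check, assuming the DFC has been parsed into a dictionary that mirrors the YAML layout. `check_dfc` is a hypothetical helper, not part of Sharemind HI, and the requirement of exactly 64 hexadecimal digits for fingerprints is an assumption inferred from the sample values shown above.

```python
import copy
import re

# Assumption: fingerprints are 64 hex digits, as in the sample values.
HEX64 = re.compile(r"[0-9A-Fa-f]{64}")


def check_dfc(dfc):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    stakeholders = [s["Name"] for s in dfc.get("Stakeholders", [])]
    ee_roles = [r["Name"] for r in dfc.get("End-Entity-Roles", [])]
    tasks = [t["Name"] for t in dfc.get("Tasks", [])]

    for name in stakeholders:
        if len(name.encode("utf-8")) > 64:
            problems.append(f"stakeholder name longer than 64 bytes: {name}")

    # Auditors and Enforcers are lists of stakeholder names.
    for key in ("Auditors", "Enforcers"):
        for name in dfc.get(key, []):
            if name not in stakeholders:
                problems.append(f"{key}: unknown stakeholder {name}")

    for role in dfc.get("End-Entity-Roles", []):
        if role.get("Assigned-By") not in stakeholders:
            problems.append(f"end-entity role {role['Name']}: unknown Assigned-By")

    for task in dfc.get("Tasks", []):
        for key in ("EnclaveFingerprint", "SignerFingerprint"):
            if not HEX64.fullmatch(str(task.get(key, ""))):
                problems.append(f"task {task['Name']}: {key} is not 64 hex digits")
        for name in task.get("Runners", []):
            if name not in stakeholders + ee_roles:
                problems.append(f"task {task['Name']}: unknown runner {name}")

    # Producers and Consumers may name stakeholders, end-entity roles, or tasks.
    for topic in dfc.get("Topics", []):
        for key in ("Producers", "Consumers"):
            for name in topic.get(key, []):
                if name not in stakeholders + ee_roles + tasks:
                    problems.append(f"topic {topic['Name']}: unknown name {name} in {key}")
    return problems


FINGERPRINT = "0123456789ABCDEF" * 4  # placeholder value, not a real fingerprint

good = {
    "Stakeholders": [{"Name": "client1"}, {"Name": "CA"}],
    "End-Entity-Roles": [{"Name": "end-entity-role-1", "Assigned-By": "CA"}],
    "Auditors": ["client1", "CA"],
    "Enforcers": ["client1"],
    "Tasks": [{"Name": "sample_task", "EnclaveFingerprint": FINGERPRINT,
               "SignerFingerprint": FINGERPRINT, "Runners": ["client1"]}],
    "Topics": [
        {"Name": "input1", "Producers": ["end-entity-role-1"], "Consumers": ["sample_task"]},
        {"Name": "output1", "Producers": ["sample_task"], "Consumers": ["client1"]},
    ],
}

# A deliberately broken copy: truncated fingerprint and an undeclared consumer.
bad = copy.deepcopy(good)
bad["Tasks"][0]["SignerFingerprint"] = "1234"
bad["Topics"][1]["Consumers"] = ["client2"]

print(check_dfc(good))
print(check_dfc(bad))
```

Such a check does not replace the enforcers' review; it only catches dangling names and malformed values early.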

4. Example dataflow configuration

    Stakeholders:
      - Name: client1
        CertificateFile: "client1.crt"
      - Name: CA
        CertificateFile: "CA_client.crt"
    End-Entity-Roles:
      - Name: end-entity-role-1
        Assigned-By: CA
    Auditors:
      - client1
      - CA
    Enforcers:
      - client1
    Tasks:
      - Name: sample_task
        EnclaveFingerprint: "0123456789ABCDEF0123456789ABCDEF0123456789ABCDEF0123456789ABCDEF"
        SignerFingerprint: "0123456789ABCDEF0123456789ABCDEF0123456789ABCDEF0123456789ABCDEF"
        Runners:
          - client1
    Topics:
      - Name: input1
        Producers:
          - end-entity-role-1
        Consumers:
          - sample_task
      - Name: output1
        Producers:
          - sample_task
        Consumers:
          - client1
        Options: ["TriggerStateSaveOnUpload"]